# LAMMPS on Taiwania3

## version

2021/1/20. patch_24Dec2020-106-g102a6eb

2021/1/28. patch_24Dec2020-194-g364727a

2021/1/29. patch_24Dec2020-208-ga77bb30

2021/2/2. patch_24Dec2020-326-g1a7cb46

2021/2/5. Update example script

2021/3/1. patch_10Feb2021-211-g9efc831

2021/3/24. patch_10Mar2021-137-g73b9f22

## compiler toolchain

For versions up to patch_24Dec2020-326-g1a7cb46:

```
module load intel/2020 cmake/3.15.4 hdf5/1.12.0_intelmpi-19.1.3.304 netcdf/4.7.4-hdf5-1.12.0_intelmpi-19.1.3.304
```

For patch_10Feb2021-211-g9efc831 and later:

```
module load compiler/intel/2021 IntelMPI/2021 hdf5/1.12 netcdf/4.7.4 adios2/2.7.1
```

## compiler recipe

```
# intel_cpu_intelmpi = USER-INTEL package, Intel MPI, MKL FFT

SHELL = /bin/sh

# ---------------------------------------------------------------------
# compiler/linker settings
# specify flags and libraries needed for your compiler

CC = mpiicpc -std=c++11
OPTFLAGS = -xHost -O2 -fp-model fast=2 -no-prec-div -qoverride-limits \
           -qopt-zmm-usage=high
CCFLAGS = -qopenmp -qno-offload -ansi-alias -restrict \
          -DLMP_INTEL_USELRT -DLMP_USE_MKL_RNG $(OPTFLAGS) \
          -I$(MKLROOT)/include
SHFLAGS = -fPIC
DEPFLAGS = -M

LINK = mpiicpc -std=c++11
LINKFLAGS = -qopenmp $(OPTFLAGS) -L$(MKLROOT)/lib/intel64/
LIB = -ltbbmalloc -lmkl_intel_ilp64 -lmkl_sequential -lmkl_core
SIZE = size

ARCHIVE = ar
ARFLAGS = -rc
SHLIBFLAGS = -shared

# ---------------------------------------------------------------------
# LAMMPS-specific settings, all OPTIONAL
# specify settings for LAMMPS features you will use
# if you change any -D setting, do full re-compile after "make clean"

# LAMMPS ifdef settings
# see possible settings in Section 3.5 of the manual

LMP_INC = -DLAMMPS_GZIP -DLAMMPS_BIGBIG -DLAMMPS_PNG

# MPI library
# see discussion in Section 3.4 of the manual
# MPI wrapper compiler/linker can provide this info
# can point to dummy MPI library in src/STUBS as in Makefile.serial
# use -D MPICH and OMPI settings in INC to avoid C++ lib conflicts
# INC = path for mpi.h, MPI compiler settings
# PATH = path for MPI library
# LIB = name of MPI library

MPI_INC = -DMPICH_SKIP_MPICXX -DOMPI_SKIP_MPICXX=1
MPI_PATH =
MPI_LIB =

# FFT library
# see discussion in Section 3.5.2 of manual
# can be left blank to use provided KISS FFT library
# INC = -DFFT setting, e.g. -DFFT_FFTW, FFT compiler settings
# PATH = path for FFT library
# LIB = name of FFT library

FFT_INC = -DFFT_MKL -DFFT_SINGLE
FFT_PATH =
FFT_LIB =

# JPEG and/or PNG library
# see discussion in Section 3.5.4 of manual
# only needed if -DLAMMPS_JPEG or -DLAMMPS_PNG listed with LMP_INC
# INC = path(s) for jpeglib.h and/or png.h
# PATH = path(s) for JPEG library and/or PNG library
# LIB = name(s) of JPEG library and/or PNG library

JPG_INC = -I/usr/local/include
JPG_PATH = -L/usr/lib64
JPG_LIB = -lpng

# ---------------------------------------------------------------------
# build rules and dependencies
# do not edit this section

include Makefile.package.settings
include Makefile.package

EXTRA_INC = $(LMP_INC) $(PKG_INC) $(MPI_INC) $(FFT_INC) $(JPG_INC) $(PKG_SYSINC)
EXTRA_PATH = $(PKG_PATH) $(MPI_PATH) $(FFT_PATH) $(JPG_PATH) $(PKG_SYSPATH)
EXTRA_LIB = $(PKG_LIB) $(MPI_LIB) $(FFT_LIB) $(JPG_LIB) $(PKG_SYSLIB)
EXTRA_CPP_DEPENDS = $(PKG_CPP_DEPENDS)
EXTRA_LINK_DEPENDS = $(PKG_LINK_DEPENDS)

# Path to src files

vpath %.cpp ..
vpath %.h ..

# Link target

$(EXE): main.o $(LMPLIB) $(EXTRA_LINK_DEPENDS)
	$(LINK) $(LINKFLAGS) main.o $(EXTRA_PATH) $(LMPLINK) $(EXTRA_LIB) $(LIB) -o $@
	$(SIZE) $@

# Library targets

$(ARLIB): $(OBJ) $(EXTRA_LINK_DEPENDS)
	@rm -f ../$(ARLIB)
	$(ARCHIVE) $(ARFLAGS) ../$(ARLIB) $(OBJ)
	@rm -f $(ARLIB)
	@ln -s ../$(ARLIB) $(ARLIB)

$(SHLIB): $(OBJ) $(EXTRA_LINK_DEPENDS)
	$(CC) $(CCFLAGS) $(SHFLAGS) $(SHLIBFLAGS) $(EXTRA_PATH) -o ../$(SHLIB) \
		$(OBJ) $(EXTRA_LIB) $(LIB)
	@rm -f $(SHLIB)
	@ln -s ../$(SHLIB) $(SHLIB)

# Compilation rules

%.o:%.cpp
	$(CC) $(CCFLAGS) $(SHFLAGS) $(EXTRA_INC) -c $<

# Individual dependencies

depend : fastdep.exe $(SRC)
	@./fastdep.exe $(EXTRA_INC) -- $^ > .depend || exit 1

fastdep.exe: ../DEPEND/fastdep.c
	cc -O -o $@ $<

sinclude .depend
```

### note

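1. Install ccache to speed up compilation.

2. Install zeromq (login/compute nodes) and zeromq-devel (login node) for the MESSAGE package.

3. cslib currently does not work with the `-DLAMMPS_BIGBIG` flag.

4. Install voro++ (login/compute nodes) and voro++-devel (login node) for the VORONOI package.

5. The MOLFILE package needs libdl.so, which is part of glibc and should already be installed.

6. When modifying a Makefile bundled under lib/, work on a copy (`cp Makefile Makefile.icc`) and build with `make -f Makefile.icc`; this avoids conflicts on `git pull`.

7. For the PYTHON package, manually replace Makefile.lammps with Makefile.lammps.python3 in lib/python; only then is the system python3 interpreter used.

8. Installing the MESSAGE package requires compiling the bundled cslib first. Add `--std=c++11` to CCFLAGS in its Makefile by hand, otherwise compilation fails with `identifier "nullptr" is undefined`.

9. After `git pull`, run `make pu && make ps` in src/ to update the selected packages and source files, then recompile.

10. `git describe --tags` shows the current version.

11. Clean the build tree: `make clean-all`

12. Build with 28 parallel jobs: `make intel_cpu_intelmpi -j 28`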
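Notes 9 to 12 together form the rebuild routine after updating the source. A minimal sketch that chains them in one shell function, assuming the path of the LAMMPS git checkout is passed as the first argument and the toolchain modules from the section above are already loaded; the function name `rebuild_lammps` and the default of 28 make jobs are illustrative choices, not part of LAMMPS:

```shell
#!/bin/bash
# Hypothetical helper (not part of LAMMPS): update a git checkout and rebuild
# the intel_cpu_intelmpi target, following notes 9-12.
# Assumes the compiler toolchain modules are already loaded.
# The body is a subshell ( ... ) so the cd calls do not change the caller's directory.
rebuild_lammps() (
  lammps_dir=$1
  jobs=${2:-28}                 # number of parallel make jobs
  cd "$lammps_dir" || exit 1
  git pull || exit 1
  git describe --tags           # print the version now checked out
  cd src || exit 1
  make pu || exit 1             # package-update: refresh installed package sources
  make ps                       # package-status: report installed packages
  make clean-all
  make intel_cpu_intelmpi -j "$jobs"
)
```

Usage, e.g. `rebuild_lammps ~/lammps 28`. Stopping on a failed `git pull` or `make pu` avoids compiling a half-updated tree.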

## exe path

initial build

/opt/ohpc/pkg/lammps/patch_24Dec2020-106-g102a6eb/lmp_intel_cpu_intelmpi

add voronoi package

/opt/ohpc/pkg/lammps/patch_24Dec2020-194-g364727a/lmp_intel_cpu_intelmpi

add meam, user-intel, user-omp, molfile, python3 package

/opt/ohpc/pkg/lammps/patch_24Dec2020-208-ga77bb30/lmp_intel_cpu_intelmpi_p3

add meam, user-intel, user-omp, molfile, python2 package

/opt/ohpc/pkg/lammps/patch_24Dec2020-208-ga77bb30/lmp_intel_cpu_intelmpi_p2

add netcdf, hdf5 package

/opt/ohpc/pkg/lammps/patch_24Dec2020-208-ga77bb30/lmp_intel_cpu_intelmpi

small update (latest patch_24Dec2020 build)

/opt/ohpc/pkg/lammps/patch_24Dec2020-326-g1a7cb46/lmp_intel_cpu_intelmpi_p2

/opt/ohpc/pkg/lammps/patch_24Dec2020-326-g1a7cb46/lmp_intel_cpu_intelmpi_p3

add user-adios and update toolchain

/opt/ohpc/pkg/lammps/patch_10Feb2021-211-g9efc831/lmp_intel_cpu_intelmpi

update toolchain

/opt/ohpc/pkg/lammps/patch_10Mar2021-137-g73b9f22/lmp_intel_cpu_intelmpi

## modulefile path

not set yet

## input file

```
# 3d Lennard-Jones melt

variable x index 1
variable y index 1
variable z index 1

variable xx equal 20*$x
variable yy equal 20*$y
variable zz equal 20*$z

units lj
atom_style atomic

lattice fcc 0.8442
region box block 0 ${xx} 0 ${yy} 0 ${zz}
create_box 1 box
create_atoms 1 box
mass 1 1.0

velocity all create 1.44 87287 loop geom

pair_style lj/cut 2.5
pair_coeff 1 1 1.0 1.0 2.5

neighbor 0.3 bin
neigh_modify delay 0 every 20 check no

fix 1 all nve

run 100
```
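The problem size scales with the `x`, `y`, `z` index variables: `create_atoms` fills a box of (20x) by (20y) by (20z) fcc unit cells, and each fcc cell holds 4 atoms, so a run has 32,000 * x * y * z atoms. A small shell helper to size a run before submitting (the name `lj_atoms` is hypothetical):

```shell
#!/bin/bash
# Hypothetical helper: number of atoms in.lj creates for given -var x/y/z values.
# fcc lattice = 4 atoms per unit cell; the box is 20x by 20y by 20z unit cells.
lj_atoms() {
  local x=$1 y=$2 z=$3
  echo $(( 4 * (20 * x) * (20 * y) * (20 * z) ))
}

lj_atoms 1 1 1      # default size: prints 32000
lj_atoms 12 21 24   # values in the basic submission script: prints 193536000
```

The 286,720,000-atom MPI-IO test on 8960 cores corresponds to x * y * z = 8960, i.e. 32,000 atoms per core.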

## basic submission script

```
#!/bin/bash
#SBATCH --job-name lammps_test # Job name
#SBATCH --output %x-%j.out # Name of stdout output file (%x expands to jobname, %j expands to jobId)

####Step 1: Selection of Nodes
###SBATCH --nodelist=gn[0103-0105,107].twcc.ai #Request a specific list of hosts
#SBATCH --nodes=4 #Controls the number of nodes allocated to the job
###SBATCH --ntasks=8 #Controls the number of tasks to be created for the job
#SBATCH --cpus-per-task=1 #Controls the number of CPUs allocated per task
#SBATCH --ntasks-per-node=56 #Controls the maximum number of tasks per allocated node
###SBATCH --ntasks-per-core #Controls the maximum number of tasks per allocated core
###SBATCH --ntasks-per-socket #Controls the maximum number of tasks per allocated socket

###Step 2: Allocation of CPUs from the selected Nodes
###SBATCH --distribution=block:cyclic #before the ":" controls the sequence in which tasks are distributed to each of the selected nodes.
#after the ":" controls the sequence in which tasks are distributed to sockets within a node.
###SBATCH --time 24:00:00 # Run time (hh:mm:ss), here 24 hours
#SBATCH --partition test
#SBATCH --account GOV108018

module purge
###module load intel/2020 hdf5/1.12.0_intelmpi-19.1.3.304 netcdf/4.7.4-hdf5-1.12.0_intelmpi-19.1.3.304
module load compiler/intel/2021 IntelMPI/2021 hdf5/1.12 netcdf/4.7.4 adios2/2.7.1

### use shared memory within a node and OFI (libfabric) between nodes
export I_MPI_FABRICS=shm:ofi
export UCX_TLS=rc,ud,sm,self

### set the process management interface (PMI) library
export I_MPI_PMI_LIBRARY=/usr/lib64/libpmi2.so

### set debug level (0: no debug info; higher values print more detail)
export I_MPI_DEBUG=10

### let Hydra launch its proxies through Slurm
export I_MPI_HYDRA_BOOTSTRAP=slurm

### set cpu binding
export I_MPI_PIN=1

###export EXE=/opt/ohpc/pkg/lammps/patch_24Dec2020-208-ga77bb30/lmp_intel_cpu_intelmpi
export EXE=/opt/ohpc/pkg/lammps/patch_10Feb2021-211-g9efc831/lmp_intel_cpu_intelmpi

echo "Running on hosts: $SLURM_NODELIST"
echo "Running on $SLURM_NNODES nodes."
echo "Running $SLURM_NTASKS tasks."
echo "$SLURM_MPI_TYPE"

### keep a copy of this submit script next to the output for debugging
SUBMIT_FILE=`scontrol show job $SLURM_JOB_ID | grep "Command=" | awk 'BEGIN {FS="="}; {print $2}'`
#echo $SUBMIT_FILE
#echo ${SUBMIT_FILE##/*/}
#echo "$SLURM_SUBMIT_DIR/$SLURM_JOB_ID.debug"
cp "$SUBMIT_FILE" "$SLURM_SUBMIT_DIR/$SLURM_JOB_ID.debug"

#mpiexec.hydra $EXE -sf hybrid intel omp -nocite -var x 20 -var y 35 -var z 40 -in in.lj
#mpiexec.hydra -bootstrap slurm -n $SLURM_NTASKS $EXE -sf hybrid intel omp -nocite -var x 12 -var y 21 -var z 24 -in in.lj
mpiexec.hydra -n $SLURM_NTASKS $EXE -sf hybrid intel omp -nocite -var x 12 -var y 21 -var z 24 -in in.lj
#srun -n $SLURM_NTASKS $EXE -sf hybrid intel omp -nocite -var x 3 -var y 21 -var z 24 -in in.lj

echo "Your LAMMPS job completed at `date` "
```

### Command-line options

#### -nocite

Disable writing the log.cite file

#### -suffix style args

LAMMPS currently has acceleration support for three kinds of hardware, via the listed packages:

| Hardware | Accelerator packages |
| :--------: | :--------: |
| Many-core CPUs | USER-INTEL, KOKKOS, USER-OMP, OPT packages |
| NVIDIA/AMD GPUs | GPU, KOKKOS packages |
| Intel Phi/AVX | USER-INTEL, KOKKOS packages |

`-sf intel`:

use styles from the USER-INTEL package

`-sf opt`:

use styles from the OPT package

`-sf omp`:

use styles from the USER-OMP package

`-sf hybrid intel omp`:

use styles from the USER-INTEL package if they are installed and available, but styles for the USER-OMP package otherwise.

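`-sf hybrid intel opt`:

use styles from the USER-INTEL package if they are installed and available, but styles for the OPT package otherwise.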

## Running many sequential jobs in parallel using job arrays

```
#!/bin/bash
#SBATCH --job-name sja # Job name
#SBATCH --output %x-%A_%a.out # Name of stdout output file (%x expands to jobname, %A to the array's master job ID, %a to the array task index)

####Step 1: Selection of Nodes
###SBATCH --nodelist=gn[0103-0105,107].twcc.ai #Request a specific list of hosts
#SBATCH --nodes=1 #Controls the number of nodes allocated to the job
###SBATCH --ntasks=8 #Controls the number of tasks to be created for the job
###SBATCH --cpus-per-task=1 #Controls the number of CPUs allocated per task
###SBATCH --ntasks-per-node=4 #Controls the maximum number of tasks per allocated node
###SBATCH --ntasks-per-core #Controls the maximum number of tasks per allocated core
###SBATCH --ntasks-per-socket #Controls the maximum number of tasks per allocated socket
#SBATCH --array=0-3 #Launch 4 array tasks with indices 0 to 3

###Step 2: Allocation of CPUs from the selected Nodes
#SBATCH --distribution=block:cyclic #before the ":" controls the sequence in which tasks are distributed to each of the selected nodes.
#after the ":" controls the sequence in which tasks are distributed to sockets within a node.
###SBATCH --time 24:00:00 # Run time (hh:mm:ss), here 24 hours
#SBATCH --partition gpu
#SBATCH --account GOV109199

module purge

echo "Running on hosts: $SLURM_NODELIST"
echo "Running on $SLURM_NNODES nodes."
echo "Running $SLURM_NTASKS tasks."
echo "$SLURM_MPI_TYPE"

SUBMIT_FILE=`scontrol show job $SLURM_JOB_ID | grep "Command=" | awk 'BEGIN {FS="="}; {print $2}'`
#echo $SUBMIT_FILE
#echo ${SUBMIT_FILE##/*/}
#echo "$SLURM_SUBMIT_DIR/$SLURM_JOB_ID.debug"
#cp $SUBMIT_FILE $SLURM_SUBMIT_DIR/$SLURM_JOB_ID.debug

echo "Your job starts at `date`"
python3 Ramanujan.py
echo "Your job completed at `date` "
```
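The script above runs the same command in every array task. To give each task its own work, a common pattern is to index into a list with `$SLURM_ARRAY_TASK_ID`, which Slurm sets to the task's index (0 to 3 for `--array=0-3`). A sketch, assuming hypothetical input files `in.case0` to `in.case3` and a hypothetical helper name `input_for_task`:

```shell
#!/bin/bash
# Sketch: map each array task to its own input via SLURM_ARRAY_TASK_ID.
# The file names are hypothetical; list one entry per index in --array.
INPUTS=(in.case0 in.case1 in.case2 in.case3)

input_for_task() {
  local id=$1
  # reject empty or non-numeric ids
  case "$id" in
    ''|*[!0-9]*) echo "invalid array task id: '$id'" >&2; return 1 ;;
  esac
  # reject ids beyond the end of the list
  if [ "$id" -ge "${#INPUTS[@]}" ]; then
    echo "no input for array task $id" >&2
    return 1
  fi
  echo "${INPUTS[$id]}"
}

# In the job script, for example:
# INPUT=$(input_for_task "$SLURM_ARRAY_TASK_ID") || exit 1
# python3 Ramanujan.py "$INPUT"   # assumes the program accepts an input argument
```

Failing loudly on an unknown index makes a mismatch between `--array` and the input list visible in the task's output file instead of silently rerunning the same case.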

## Packaging smaller parallel jobs into one job script

```
#!/bin/bash
#SBATCH --job-name lammps_test # Job name
#SBATCH --output %x-%j.out # Name of stdout output file (%x expands to jobname, %j expands to jobId)

####Step 1: Selection of Nodes
###SBATCH --nodelist=gn[0103-0105,107].twcc.ai #Request a specific list of hosts
#SBATCH --nodes=8 #Controls the number of nodes allocated to the job
###SBATCH --ntasks=8 #Controls the number of tasks to be created for the job
#SBATCH --cpus-per-task=1 #Controls the number of CPUs allocated per task
#SBATCH --ntasks-per-node=56 #Controls the maximum number of tasks per allocated node
###SBATCH --ntasks-per-core #Controls the maximum number of tasks per allocated core
###SBATCH --ntasks-per-socket #Controls the maximum number of tasks per allocated socket

###Step 2: Allocation of CPUs from the selected Nodes
###SBATCH --distribution=block:cyclic #before the ":" controls the sequence in which tasks are distributed to each of the selected nodes.
#after the ":" controls the sequence in which tasks are distributed to sockets within a node.
###SBATCH --time 24:00:00 # Run time (hh:mm:ss), here 24 hours
#SBATCH --partition test
#SBATCH --account GOV109199

module purge
module load intel/19.1.3.304

export I_MPI_FABRICS=shm:ofi
export UCX_TLS=rc,ud,sm,self
export I_MPI_PMI_LIBRARY=/opt/qct/ohpc/admin/pmix/lib/libpmi2.so
export I_MPI_DEBUG=10

export EXE=/opt/ohpc/pkg/lammps/patch_24Dec2020-106-g102a6eb/lmp_intel_cpu_intelmpi

# When running a large number of tasks simultaneously, it may be
# necessary to increase the user process limit.
ulimit -u 10000

echo "Running on hosts: $SLURM_NODELIST"
echo "Running on $SLURM_NNODES nodes."
echo "Running $SLURM_NTASKS tasks."

echo "Your LAMMPS job starts at `date`"
mpiexec.hydra $EXE -sf hybrid intel omp -nocite -var x 20 -var y 35 -var z 40 -in in.lj
echo "Your LAMMPS job completed at `date` "
```
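As written, the script above launches a single large run. One common way to pack several smaller MPI runs into one allocation is to start one job step per input in the background and `wait` for all of them. A minimal sketch, assuming `srun` as the launcher and one node (56 ranks) per run; the function name `run_packed` and the input names are hypothetical. Depending on the Slurm version, the `srun` line may also need `--exclusive` (older releases) or `--exact` (newer releases) so that concurrent steps do not share CPUs; check the `srun` man page on the system.

```shell
#!/bin/bash
# Sketch: run several independent LAMMPS inputs side by side inside one
# allocation, one background job step per input, then wait for all of them.
# LAUNCH defaults to srun; set LAUNCH=echo for a dry run that only prints commands.
# EXE must point to the LAMMPS binary, as in the script above.
run_packed() {
  local ranks_each=$1; shift
  local input name pid pids="" status=0
  for input in "$@"; do
    name=$(basename "$input"); name=${name#in.}   # in.lj_a -> lj_a
    # One node and $ranks_each ranks per step; separate -log files keep the
    # runs from overwriting each other's log.lammps.
    ${LAUNCH:-srun} -N 1 -n "$ranks_each" "$EXE" -sf hybrid intel omp -nocite \
      -in "$input" -log "log.$name" > "out.$name" 2>&1 &
    pids="$pids $!"
  done
  for pid in $pids; do
    wait "$pid" || status=1   # remember whether any step failed
  done
  return "$status"
}
```

For example, `run_packed 56 in.lj_a in.lj_b` starts two concurrent 56-rank runs and returns non-zero if either of them fails.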

## MPI-IO test

problem size:

286720000 atoms

computing resources:

160 nodes (8960 cores)

lammps command:

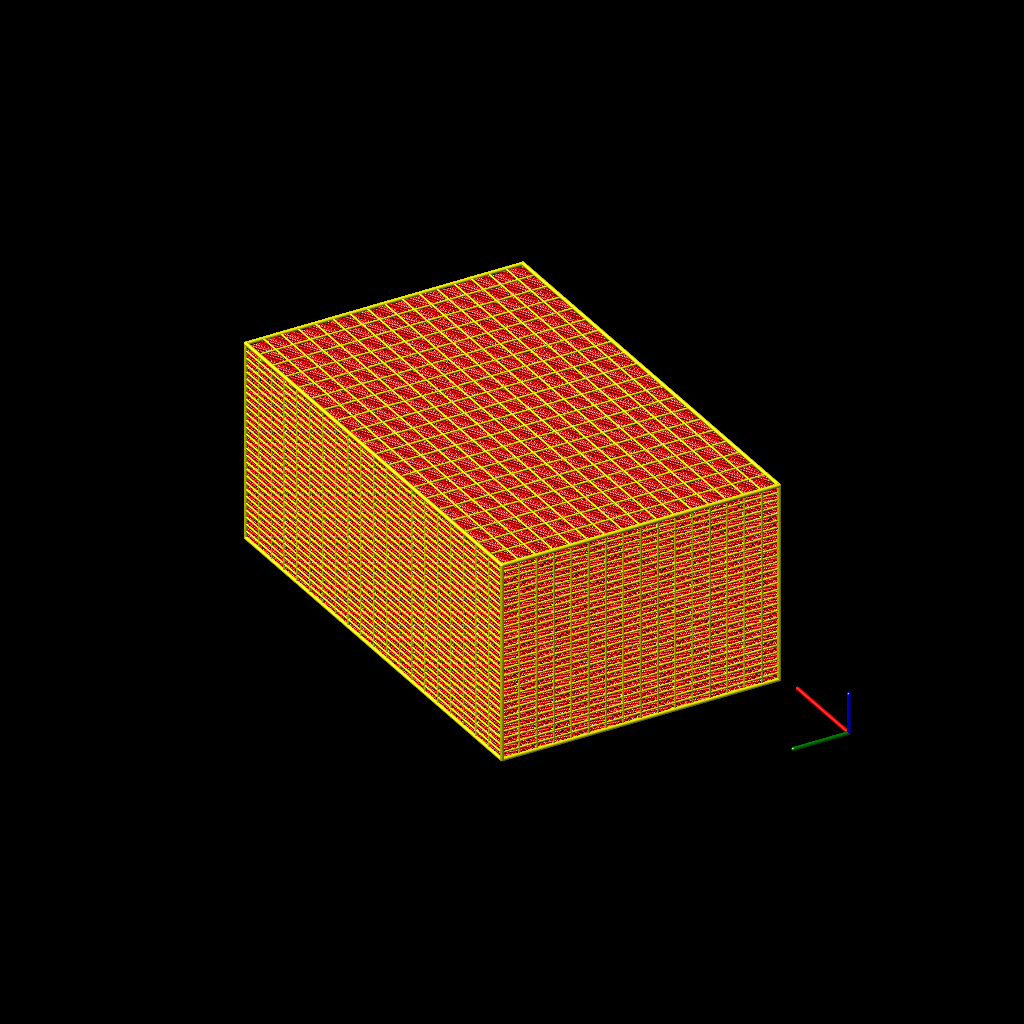

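`write_dump all image initial.png type type view 60 150 axes yes 0.2 0.02 subbox yes 0.01 size 1024 1024`

The `subbox yes 0.01` option draws the processor subdomain boundaries, visualizing how the simulation box is partitioned among MPI ranks.

Imagine the xy plane lying flat on a desk with the z axis pointing straight up. In `view 60 150`, the first value (theta = 60) is the polar angle measured from the +z axis, so the viewer looks down from 30 degrees above the xy plane; the second value (phi = 150) rotates the viewpoint about the z axis.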
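To make the geometry concrete: treating theta as the polar angle from +z and phi as the azimuth in the xy plane measured from +x toward +y (the standard spherical-coordinate convention; the exact reference direction LAMMPS uses for phi is an assumption here, so check the `dump image` documentation), the viewer sits along the unit vector (sin theta cos phi, sin theta sin phi, cos theta) from the box center. A small awk-based helper, with the hypothetical name `view_dir`:

```shell
#!/bin/bash
# Hypothetical helper: unit vector from the box center toward the viewer for
# "view theta phi", assuming theta = polar angle from +z and
# phi = azimuth from +x toward +y (standard spherical coordinates).
view_dir() {
  awk -v t="$1" -v p="$2" 'BEGIN {
    pi = atan2(0, -1)                    # pi computed from atan2
    t = t * pi / 180; p = p * pi / 180   # degrees -> radians
    printf "%.3f %.3f %.3f\n", sin(t) * cos(p), sin(t) * sin(p), cos(t)
  }'
}

view_dir 60 150   # prints -0.750 0.433 0.500
```

The z component cos(60) = 0.5 corresponds to the 30-degree elevation above the xy plane.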

dump columns:

`mass type xs ys zs element`

4 float64, 1 integer, 1 string (the string is counted as 4 bytes)

4 * 8 bytes + 1 * 4 bytes + 1 * 4 bytes = 40 bytes per atom

286,720,000 atoms * 40 bytes = 11,468,800,000 bytes = 11,200,000 KB (1 KB = 1024 bytes)
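The same estimate as a shell calculation, using the per-column sizes above (8 bytes per float64, 4 bytes each for the integer and the string) and 1 KB = 1024 bytes; the helper name `dump_size_kb` is hypothetical:

```shell
#!/bin/bash
# Rough per-snapshot dump size from a per-atom byte count.
# Columns (mass type xs ys zs element): 4 x float64 + 1 x integer + 1 x 4-byte string.
BYTES_PER_ATOM=$(( 4 * 8 + 1 * 4 + 1 * 4 ))   # 40 bytes

dump_size_kb() {
  local natoms=$1
  echo $(( natoms * BYTES_PER_ATOM / 1024 ))   # integer KB, 1 KB = 1024 bytes
}

dump_size_kb 286720000   # prints 11200000
```

The measured cfg files below (11,095,363 KB) are within about 1% of this estimate.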

| dump style | file size | start (access time) | end (modify time) | elapsed |
| -------- | -------- | -------- | -------- | -------- |
| image | 300 KB | 02:37:04 | 02:37:05 | 1s |
| cfg/mpiio.gz | 11,095,363 KB | 02:37:05 | 02:37:35 | 30s |
| cfg | 11,095,363 KB | 02:37:58 | 02:38:04 | 6s |
| cfg/gz | 790,966 KB | 02:38:04 | 03:10:00 | 31m56s |
| cfg/mpiio.bin | 11,095,363 KB | 03:10:00 | 03:10:31 | 31s |

Note: according to the LAMMPS `dump` documentation, the `.bin` suffix can also be used with an MPI-IO dump file. A binary dump file will be about the same size as a text version, but will typically write out much faster.
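The start and end columns in the table appear to be the dump file's access and modification timestamps. Assuming that is how they were collected, the elapsed write time can be read back with GNU `stat` (`%X` = last access, `%Y` = last modification, both in seconds since the epoch). This is only meaningful if nothing has read the file since it was written and the file system does not suppress access-time updates (for example a `noatime` mount). The helper name `dump_write_seconds` is hypothetical:

```shell
#!/bin/bash
# Hypothetical helper: seconds between a file's last access (%X) and last
# modification (%Y). For a freshly written dump that nobody has read yet,
# the access time is roughly when the file was created.
dump_write_seconds() {
  local atime mtime
  atime=$(stat -c %X "$1") || return 1
  mtime=$(stat -c %Y "$1") || return 1
  echo $(( mtime - atime ))
}
```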

### formatting sacct output

`sacct --format="JobID,JobName%30,Partition,Account,AllocCPUS,State,ExitCode"`
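To avoid retyping the format string, it can be wrapped in a shell function that forwards any extra `sacct` options such as `-j <jobid>` or `-S <start date>`. A sketch, with the hypothetical name `sacctf`:

```shell
#!/bin/bash
# Hypothetical wrapper: sacct with the column layout above.
# JobName%30 widens the JobName column to 30 characters.
# Extra options are passed through, e.g. sacctf -j 123456 or sacctf -S 2021-03-01.
sacctf() {
  sacct --format="JobID,JobName%30,Partition,Account,AllocCPUS,State,ExitCode" "$@"
}
```

Putting the function in `~/.bashrc` makes it available in every login shell.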

### Large-scale simulation considerations

## mpi

### intelmpi

#### Reduce initialization times

If all ranks work on the same Intel Architecture generation, switch off the platform check:

`I_MPI_PLATFORM_CHECK=0`

Specify the processor architecture being used to tune the collective operations:

`I_MPI_PLATFORM=uniform`

Alternative PMI data exchange algorithm can help to speed up the startup phase:

`I_MPI_HYDRA_PMI_CONNECT=alltoall`

Customizing the branching may also help startup times (default is 32 for over 127 nodes):

`I_MPI_HYDRA_BRANCH_COUNT=<n>`
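These settings can be collected in a function and called in the job script right before `mpiexec.hydra`, applying them only to large runs. A sketch, assuming a hypothetical threshold of 128 nodes (matching the 127-node boundary mentioned above) and an optional branch count argument; the function name `tune_impi_startup` is hypothetical. Some of these variables (for example `I_MPI_PLATFORM_CHECK`) may be ignored by newer Intel MPI releases, so confirm their effect in the `I_MPI_DEBUG` output.

```shell
#!/bin/bash
# Sketch: apply the Intel MPI start-up settings above for large jobs only.
# The 128-node threshold is an assumption; the branch count is optional.
tune_impi_startup() {
  local nnodes=${1:-${SLURM_NNODES:-1}}
  local branch=${2:-}
  [ "$nnodes" -ge 128 ] || return 0          # small job: keep the defaults
  export I_MPI_PLATFORM_CHECK=0              # all ranks on the same CPU generation
  export I_MPI_PLATFORM=uniform
  export I_MPI_HYDRA_PMI_CONNECT=alltoall    # alternative PMI data exchange
  if [ -n "$branch" ]; then
    export I_MPI_HYDRA_BRANCH_COUNT=$branch  # custom launch-tree branching
  fi
}
```

For example, `tune_impi_startup "$SLURM_NNODES" 64` in the job script, where 64 is only an illustrative branch count.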

### openmpi

## Queuing system

### slurm

Specifies that the batch job should never be requeued under any circumstances.

`#SBATCH --no-requeue`

### pbspro