---

title: Monitor Your Resource

tags: HowTo, CCS, Interactive, EN

GA: UA-155999456-1

---

{%hackmd @docsharedstyle/default %}

# HowTo: Monitor Your Resource - GPU Burn Testing

This tutorial provides a GPU stress-testing tool that checks whether the GPU operates normally under full load.

If the final result is `OK`, the GPU works properly. If the final result is `FAULTY`, something is wrong with the GPU.

## Step 1. Sign in to TWCC

- If you do not have an account yet, please refer to [Sign Up for TWCC](https://www.twcc.ai/doc?page=register_account).

## Step 2. Create an interactive container

- Please refer to [Interactive Container](https://www.twcc.ai/doc?page=container#Creating-interactive-containers) to create an interactive container.

- For Image Type, select TensorFlow (version 18). For Image, select `tensorflow-21.11-tf2-py3:latest` or a later version. For Basic Configuration, select 1 GPU.

## Step 3. Connect to the container and download training code

- Use Jupyter Notebook to connect to the container, then open a Terminal.

:::info

:book: See [Using Jupyter Notebook](https://www.twcc.tw/doc?page=container#Using-Jupyter-Notebook)

:::

- Enter the following command to download the training code from [NCHC_GitHub](https://github.com/TW-NCHC/AI-Services/tree/V3Training) to the container.

```bash=

git clone https://github.com/TW-NCHC/AI-Services.git

```

## Step 4. Run the GPU burn test

- Enter the following command to change to the **Tutorial_Two** directory.

```bash=

cd AI-Services/Tutorial_Two

```

- Enter the following command to download the GPU_Burn program and start running it.

```bash=

bash gpu_testing.sh

```
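
If you want to keep the test output and check the final verdict afterwards, the following is a minimal sketch. It assumes the saved output contains the word `OK` or `FAULTY` as described at the top of this tutorial. The function name `check_burn_result` and the log file name are hypothetical and not part of the repository.

```shell
# Hypothetical helper: report the verdict found in saved gpu-burn output.
# Assumption: the log contains the word OK or FAULTY for the tested GPU.
check_burn_result() {
    log_file="$1"
    if grep -qw "FAULTY" "$log_file"; then
        # Any FAULTY verdict means the test failed.
        echo "FAULTY"
        return 1
    elif grep -qw "OK" "$log_file"; then
        echo "OK"
        return 0
    else
        # Neither word was found, for example because the test was interrupted.
        echo "UNKNOWN"
        return 2
    fi
}
```

One possible usage is to save the output with `bash gpu_testing.sh 2>&1 | tee gpu_burn.log` and then run `check_burn_result gpu_burn.log`.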

## Step 5. Get the computing resource information

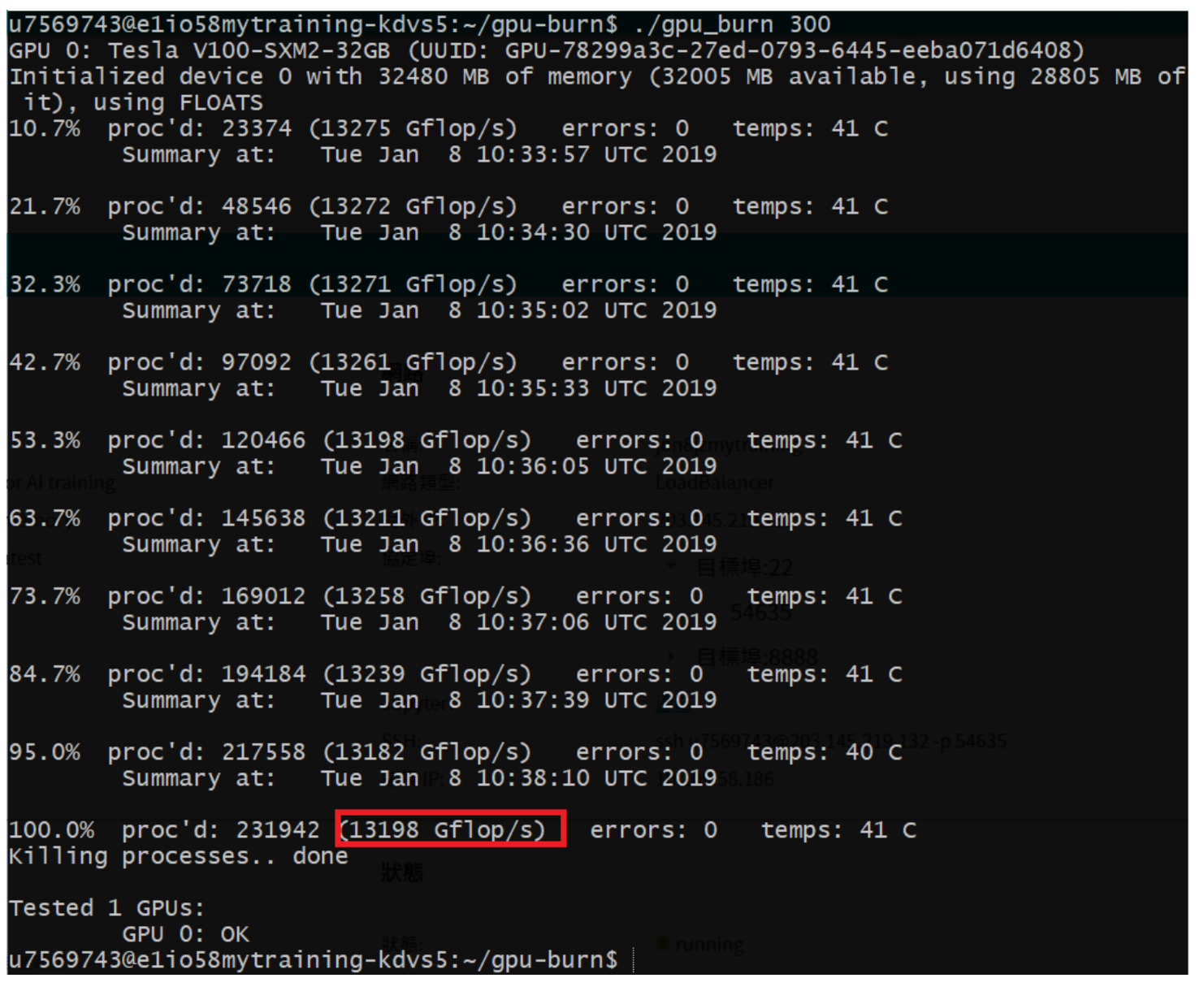

- **Computing capability**

The Container Compute Service uses NVIDIA V100 32GB GPUs, which offer powerful computing capability. In a gpu-burn test run, the measured computing capability was 13198 Gflop/s.

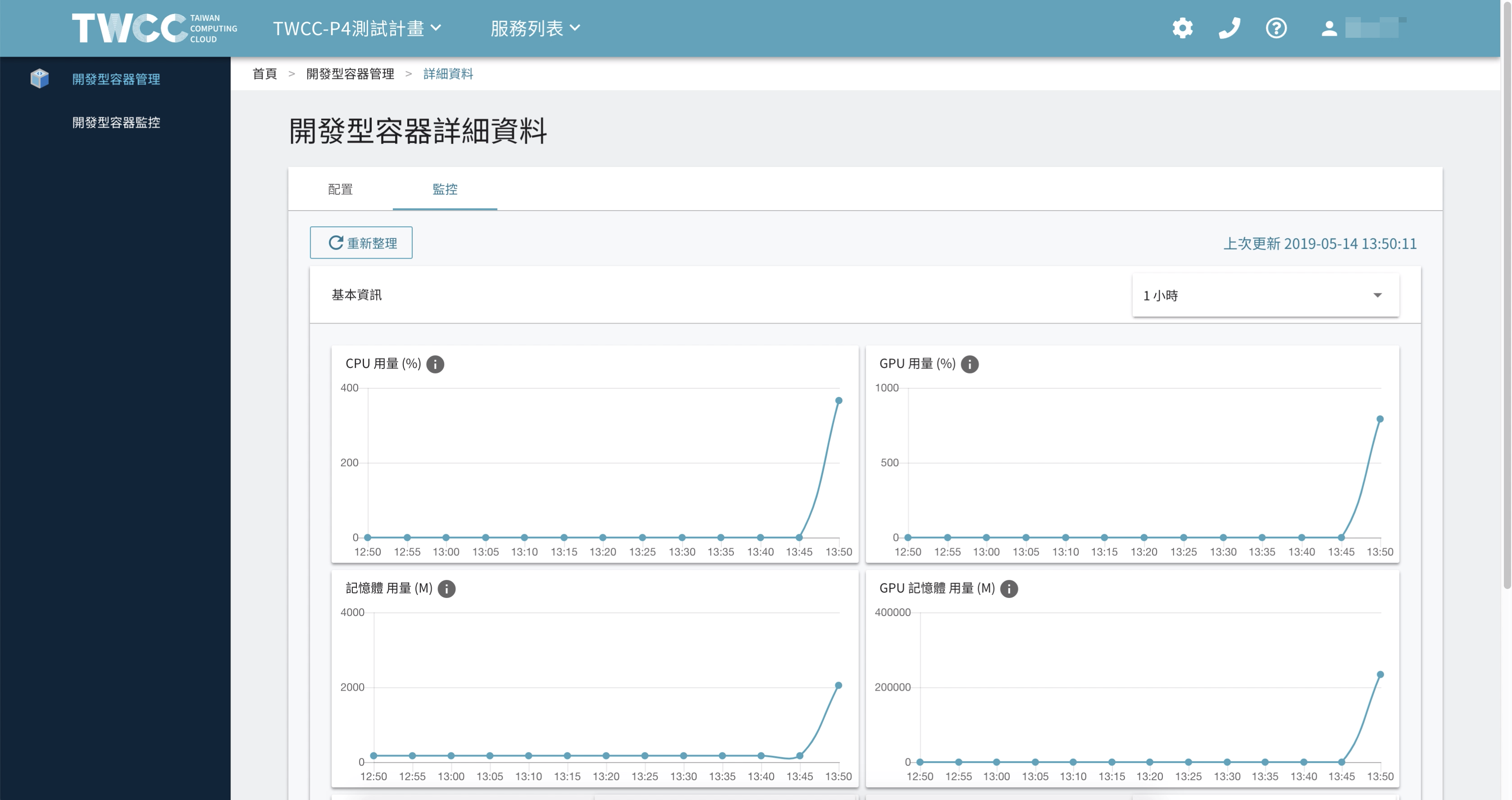
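
To read the throughput from saved output rather than from the screen, a small sketch such as the following may help. It assumes the output contains figures written as `<number> Gflop/s`, like the value quoted above. The function name `peak_tflops` is hypothetical.

```shell
# Hypothetical helper: print the largest "<number> Gflop/s" figure
# found in a saved log, converted to Tflop/s (1 Tflop/s = 1000 Gflop/s).
peak_tflops() {
    grep -oE '[0-9]+(\.[0-9]+)? Gflop/s' "$1" |
        awk '{ if ($1 > max) max = $1 }
             END { if (NR > 0) printf "%.3f Tflop/s\n", max / 1000 }'
}
```

For a log whose highest reading is 13198 Gflop/s, `peak_tflops gpu_burn.log` prints `13.198 Tflop/s`.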

- **Resource health monitoring**

a. Container monitoring page: GPU usage and memory usage

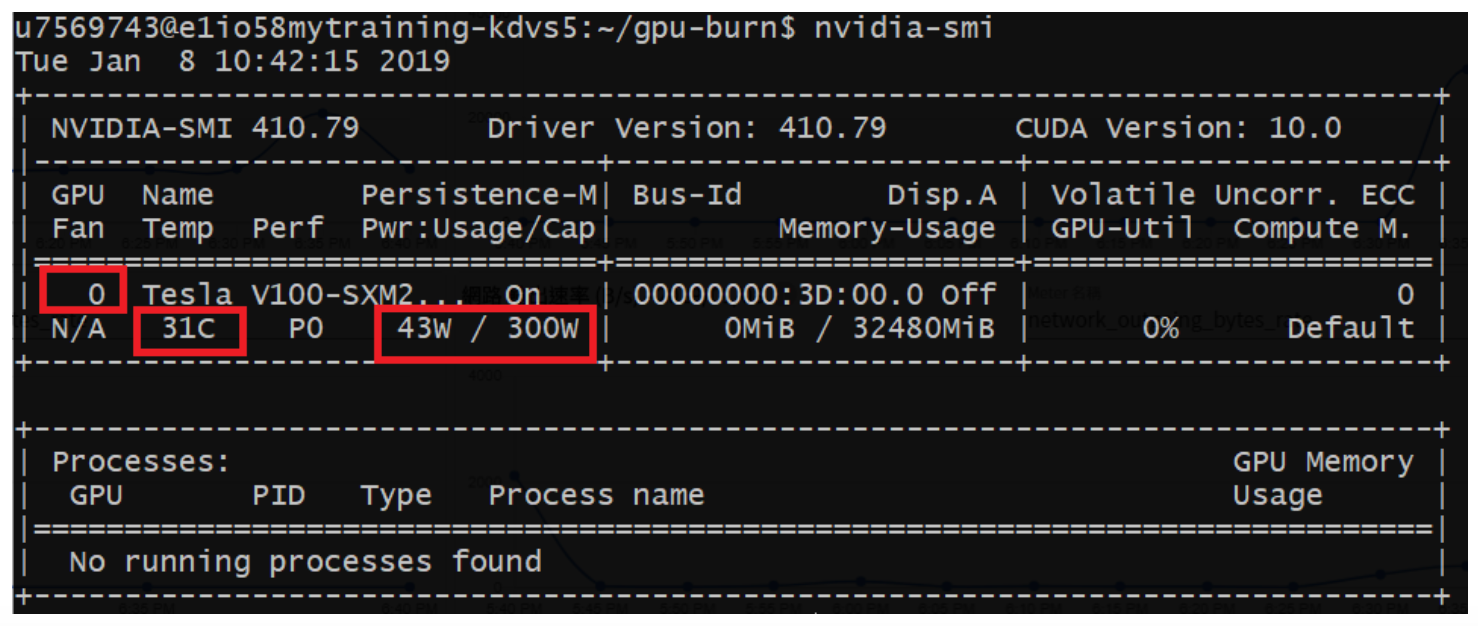

b. Run the following command in the Terminal of the container's Jupyter Notebook to monitor the GPU number, temperature, and power.

```bash=

nvidia-smi

```

`GPU number` starts at 0 and increases by one for each additional GPU. The following example shows 1 GPU.

`GPU temperature` is shown in Celsius. The diagram below shows 31 degrees Celsius.

`GPU power` is shown in watts. The diagram below shows 43W.
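
To print only these three values, `nvidia-smi` can also be queried in CSV form. The sketch below uses the `--query-gpu` and `--format` options of `nvidia-smi`. The helper name `format_gpu_stats` is hypothetical and only reshapes each CSV line into a short summary.

```shell
# Hypothetical helper: turn CSV lines "index, temperature, power"
# into a one-line summary per GPU.
format_gpu_stats() {
    awk -F', *' '{ printf "GPU %s: %s C, %s W\n", $1, $2, $3 }'
}

# Query GPU number, temperature (Celsius), and power draw (watts).
# The check skips the query on machines without nvidia-smi installed.
if command -v nvidia-smi >/dev/null 2>&1; then
    nvidia-smi --query-gpu=index,temperature.gpu,power.draw \
               --format=csv,noheader,nounits | format_gpu_stats
fi
```

To refresh the full report periodically while the burn test runs, `nvidia-smi -l 5` repeats the output every 5 seconds until interrupted.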